AI tools are popping up everywhere. They can do some amazing things, but let's be honest, sometimes they just... make stuff up. Confidently wrong answers, often called "hallucinations," are a real headache, especially if you want to use AI for serious business tasks. Nobody wants a customer service bot inventing company policy!

So, how do we make AI more reliable and less likely to go off-script? Enter Retrieval-Augmented Generation, or RAG for short. Think of it as giving the AI an open-book exam: before it answers your question, it looks up relevant, up-to-date information, then uses its ability to generate human-like text to write a response based on what it found. This simple-sounding combo can make a big difference in accuracy.

If you're thinking about using AI for things like customer support, internal knowledge sharing, or anything where getting the facts straight matters, understanding RAG is pretty important. It's about building AI you can actually trust in the real world.

How Does RAG Actually Work? (It's Like Research Before Talking)

So, what's happening under the hood? Normally, AI models generate answers based purely on the massive amounts of text data they were trained on (which might be months or even years old!). RAG adds a crucial extra step.

When you ask a question or give a prompt to a RAG system, it doesn't just jump to generating an answer. First, it goes and retrieves relevant information from a specific set of trusted sources. This could be your company's internal knowledge base, product manuals, recent reports, specific databases, or whatever else you want the AI to be grounded in. These sources are usually indexed ahead of time (often by splitting documents into smaller chunks and storing them in a search index or vector database) so the system can find the relevant passages quickly.

Once it has fetched this relevant, current information, the AI then uses it, along with its general language skills, to generate a response.

It's essentially doing a quick research check before it "speaks." This blend means the answers aren't just based on old patterns but are informed by specific, timely facts, making them much more accurate and contextually relevant.
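To make the "research first, then speak" idea concrete, here is a minimal sketch of the two steps. Everything in it is an assumption for illustration: the sample documents, the function names (`retrieve`, `build_prompt`), and the simple word-overlap scoring, which stands in for the vector search a real system would more likely use. The final call to a language model is left out, since that depends on which model you use.

```python
import re

# Hypothetical knowledge base: in practice this would be your own documents.
KNOWLEDGE_BASE = [
    "Return policy: sale items can be returned within 14 days with a receipt.",
    "Shipping: standard orders arrive in 3 to 5 business days.",
    "Warranty: all electronics include a one-year limited warranty.",
]

def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=1):
    """Step 1: rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    scored = [(len(question_words & tokenize(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Keep only documents that share at least one word with the question.
    return [doc for score, doc in scored[:top_k] if score > 0]

def build_prompt(question, passages):
    """Step 2: combine the retrieved passages and the question into one prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )

question = "What's the return policy for items bought on sale?"
passages = retrieve(question, KNOWLEDGE_BASE)
prompt = build_prompt(question, passages)
print(prompt)  # this prompt would then be sent to the language model
```

The point of the sketch is the order of operations: the model never sees the question on its own, only the question plus the passages that were looked up for it.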

Stopping AI From Just Making Things Up (Fighting Hallucinations)

One of AI's biggest challenges is "hallucinations": that whole "making stuff up" problem, where a model produces an answer that sounds perfectly reasonable but is totally wrong or even nonsensical. RAG is a direct counter to this issue.

Because the AI has to consult those trusted data sources before generating its answer, it's far less likely to pull incorrect information out of thin air. It grounds the AI's response in actual facts relevant to the query.

Why is this so critical? Imagine a financial advice bot giving wrong information, or a technical support AI inventing troubleshooting steps. That erodes trust fast and can cause real problems. RAG helps prevent this by steering the AI to base its answers on reliable data you provide or point it to. It doesn't make mistakes impossible, but because each answer can be traced back to the sources that were retrieved, it makes the AI "show its work," in a sense, leading to answers you can have much more confidence in.
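One common way to get that "show its work" behavior is to label each retrieved passage with its source and to decline outright when nothing relevant was found. The sketch below assumes a retrieval step has already returned a list of (source name, passage) pairs; the function name `grounded_reply`, the sample source, and the fallback wording are all hypothetical.

```python
def grounded_reply(question, retrieved):
    """Prepare a prompt only when there is source material to cite.

    `retrieved` is a list of (source_name, passage) pairs from a retrieval step.
    """
    if not retrieved:
        # Nothing trusted to lean on: decline rather than let the model guess.
        return {
            "prompt": None,
            "sources": [],
            "fallback": "I couldn't find that in our documentation.",
        }
    # Tag every passage with its source so the answer can point back to it.
    context = "\n".join(f"[{name}] {passage}" for name, passage in retrieved)
    prompt = (
        "Answer the question using only the sources below, and name the "
        "source you used. If the sources do not contain the answer, say so.\n"
        f"{context}\n"
        f"Question: {question}"
    )
    return {
        "prompt": prompt,
        "sources": [name for name, _ in retrieved],
        "fallback": None,
    }

# Hypothetical retrieved passage, for illustration only.
found = grounded_reply(
    "How do I reset the router?",
    [("router-manual.pdf", "Hold the reset button for 10 seconds.")],
)
nothing = grounded_reply("How do I reset the router?", [])

print(found["sources"])
print(nothing["fallback"])
```

Returning the source names alongside the prompt means the application can show them to the user, so a wrong answer is easier to spot and check.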

Why RAG is a Game-Changer for Businesses

When you're using AI for tasks that really matter to your business, reliability isn't just nice-to-have, it's essential. This is where RAG shines.

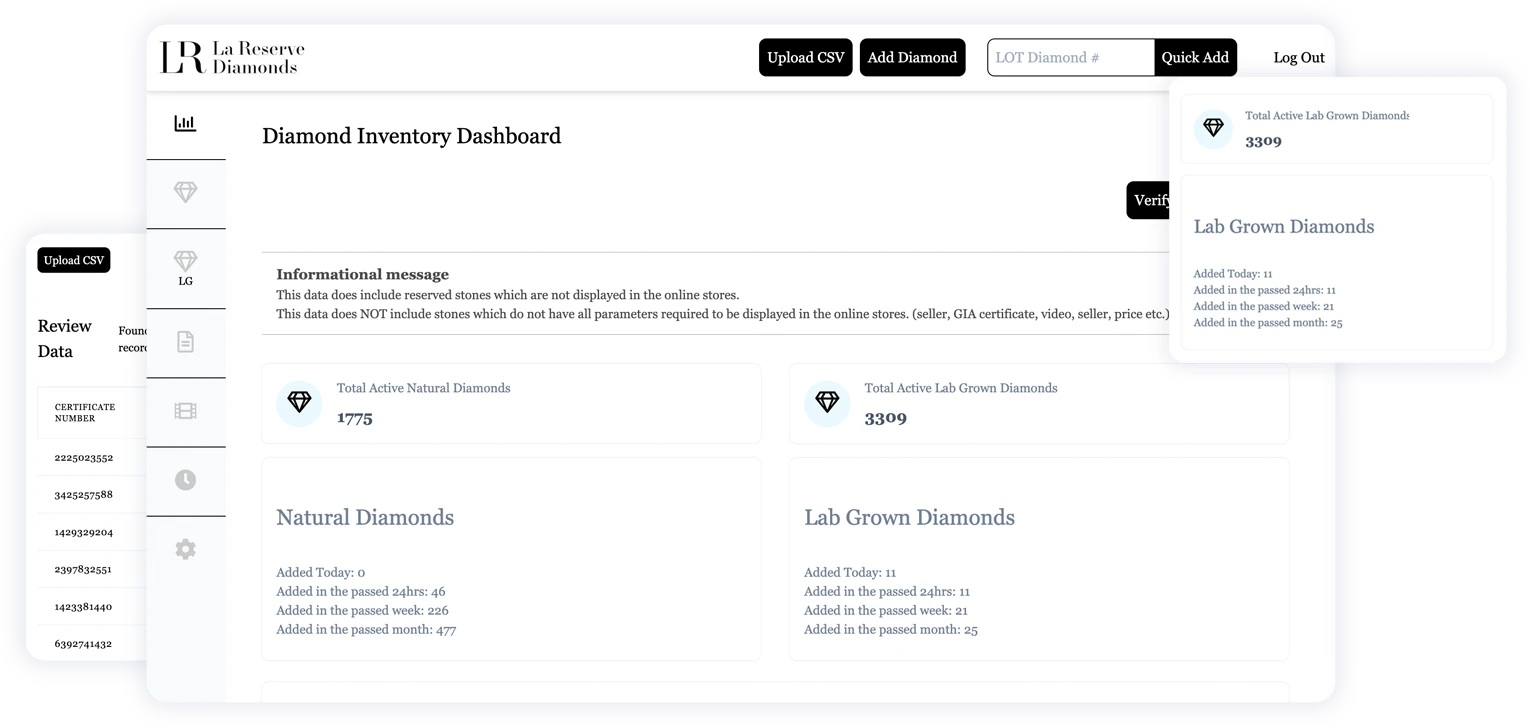

Think about customer service again. A RAG-powered chatbot can pull the very latest product specifications, pricing, or company policies directly from your internal systems when a customer asks. No more worrying if the bot is giving out yesterday's news! This leads to more accurate help, happier customers, and less risk for your business.

Because RAG grounds the AI in your specific company knowledge, it drastically reduces those embarrassing hallucinations. You get AI that can handle complex questions about your specific products or services much more accurately.

Plus, it often makes AI easier to adapt. Instead of fully retraining a massive AI model every time your information changes, you can often just update the data sources RAG checks. This saves time and money. RAG helps align powerful AI tools with the practical, fast-moving needs of a real business.
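Here is a small sketch of what "just update the data sources" can look like. The `KnowledgeBase` class, its `upsert` and `search` methods, and the pricing figures are all made up for illustration, and the word-overlap search is a stand-in for whatever index a real system uses. The thing to notice is that the answer changes after a document is replaced, with no model retraining involved.

```python
import re

class KnowledgeBase:
    """A tiny in-memory document store keyed by document ID (illustrative only)."""

    def __init__(self):
        self.documents = {}

    def upsert(self, doc_id, text):
        """Add a document, or replace the existing one with the same ID."""
        self.documents[doc_id] = text

    def search(self, question):
        """Return the document sharing the most words with the question, or None."""
        words = set(re.findall(r"[a-z0-9]+", question.lower()))
        best_text, best_score = None, 0
        for text in self.documents.values():
            score = len(words & set(re.findall(r"[a-z0-9]+", text.lower())))
            if score > best_score:
                best_text, best_score = text, score
        return best_text

kb = KnowledgeBase()
kb.upsert("pricing", "The Pro plan price is 20 dollars per month.")
before = kb.search("What is the price of the Pro plan?")

# Pricing changes: replace the document. The model itself is untouched.
kb.upsert("pricing", "The Pro plan price is 25 dollars per month.")
after = kb.search("What is the price of the Pro plan?")

print(before)
print(after)
```

Because the document is replaced under the same ID, the old price is gone from the store entirely, so there is no stale copy for the retrieval step to pick up by mistake.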

RAG in the Wild: How It's Used

Let's picture a couple of real-world scenarios:

First, imagine a supercharged website chatbot: A customer lands on your retail site and asks your chatbot, "What's the return policy for items bought on sale?" The RAG chatbot doesn't guess. It instantly checks the latest official return policy document in your knowledge base and gives the customer the correct, current answer, maybe even linking to the policy page. Clean, accurate, trustworthy.

Next, picture smarter internal help: An employee needs to know the process for submitting a new project proposal. Instead of searching through confusing folders on a shared drive, they ask an internal RAG-powered search tool. The tool pulls the precise steps and relevant links directly from the latest official process documents stored in the company's knowledge system. Fast, accurate answers mean better productivity.

Whether it's customer-facing support, internal knowledge sharing, or specialized information portals, RAG helps ensure the AI is providing consistent, reliable information based on approved sources.

RAG Is a Smarter Way to Do AI

So, RAG isn't just another piece of AI jargon. It's a practical and powerful way to make AI more dependable and useful, especially for businesses that need accuracy. By making sure AI checks its facts against real, up-to-date information, RAG tackles the hallucination problem head-on and builds trust.

It leads to AI that's not just impressively clever, but also genuinely helpful and grounded in your business reality. If you're looking to implement AI solutions where factual accuracy is key, getting familiar with RAG is definitely a step in the right direction.

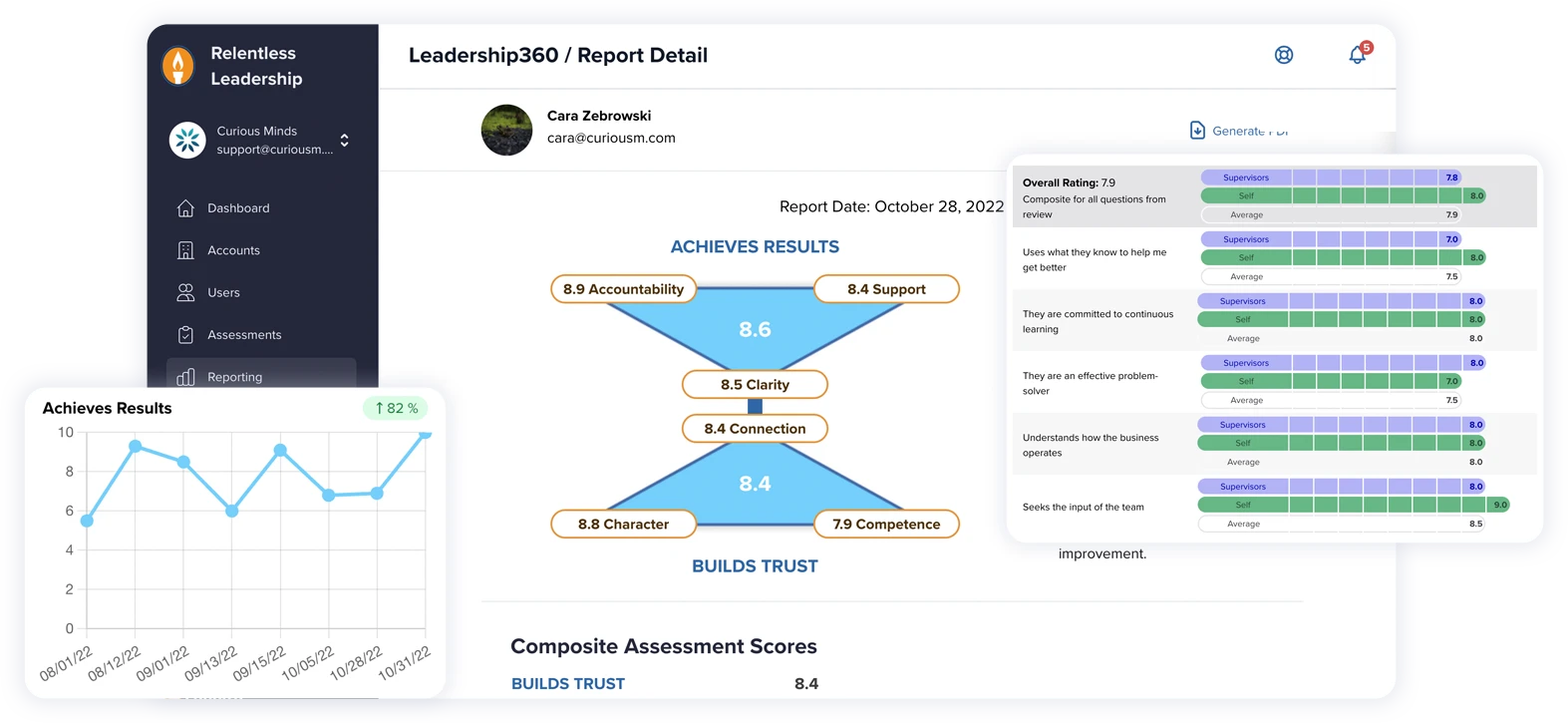

Partnering with experts like Curious Minds can provide the guidance needed to integrate this technology effectively and keep it aligned with your business goals.